The Media Capture and Streams API (aka MediaStream API) lets you capture audio from a user’s microphone as a stream of media tracks. Together with the MediaRecorder interface, you can record those tracks. You can then either play the recording straight away or upload it to your server.

In this tutorial, we’ll create a website that uses the MediaStream API to let the user record something, then upload the recorded audio to the server to be saved. The user will also be able to see and play all the uploaded recordings.

You can find the full code for this tutorial in this GitHub Repository.

Setting Up The Server

We’ll start by creating a Node.js and Express server, so first make sure Node.js is installed on your machine. If it isn’t, download and install it.

Create a directory

Create a new directory that will hold the project, and change to that directory:

mkdir recording-tutorial
cd recording-tutorial

Initialize the project

Then, initialize the project with npm:

npm init -y

The option -y creates package.json with the default values.

Install the dependencies

Next, we’ll install Express for the server we’re creating and nodemon to restart the server when there are any changes:

npm i express nodemon

Create the Express server

We can start now by creating a simple server. Create index.js in the root of the project with the following content:

const path = require('path');
const express = require('express');

const app = express();
const port = process.env.PORT || 3000;

app.use(express.static('public/assets'));

app.listen(port, () => {
  console.log(`App listening at http://localhost:${port}`);
});

This creates a server that will run on port 3000 unless a port is set in the environment, and it exposes a directory public/assets — which we’ll create soon — that will hold JavaScript and CSS files and images.

Add a script

Finally, add a start script under scripts in package.json:

"scripts": {
  "start": "nodemon index.js"
},

Start the web server

Let’s test our server. Run the following to start the server:

npm start

The server should start on port 3000. You can try accessing it at localhost:3000, but you’ll see the message “Cannot GET /” since we haven’t defined any routes yet.

Creating the Recording Page

Next, we’ll create the main page of the website. The user will use this page to record, view, and play recordings.

Create the public directory, and inside that create an index.html file with the following content:

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta http-equiv="X-UA-Compatible" content="IE=edge">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Record</title>
  <link href="https://cdn.jsdelivr.net/npm/bootstrap@5.1.0/dist/css/bootstrap.min.css" rel="stylesheet"
    integrity="sha384-KyZXEAg3QhqLMpG8r+8fhAXLRk2vvoC2f3B09zVXn8CA5QIVfZOJ3BCsw2P0p/We" crossorigin="anonymous">
  <link href="/css/index.css" rel="stylesheet" />
</head>
<body class="pt-5">
  <div class="container">
    <h1 class="text-center">Record Your Voice</h1>
    <div class="record-button-container text-center mt-5">
      <button class="bg-transparent border btn record-button rounded-circle shadow-sm text-center" id="recordButton">
        <img src="/images/microphone.png" alt="Record" class="img-fluid" />
      </button>
    </div>
  </div>
</body>
</html>

This page uses Bootstrap 5 for styling. For now, the page just shows a button that the user can use for recording.

Note that we’re using an image for the microphone. You can download the icon from Iconscout, or you can use the modified version in the GitHub repository.

Download the icon and place it inside public/assets/images with the name microphone.png.

Adding styles

We’re also linking the stylesheet index.css, so create a public/assets/css/index.css file with the following content:

.record-button {
  height: 8em;
  width: 8em;
  border-color: #f3f3f3 !important;
}

.record-button:hover {
  box-shadow: 0 .5rem 1rem rgba(0,0,0,.15)!important;
}

Creating the route

Finally, we just need to add the new route in index.js. Add the following before app.listen:

app.get('/', (req, res) => {
  res.sendFile(path.join(__dirname, 'public/index.html'));
});

If the server isn’t already running, start the server with npm start. Then go to localhost:3000 in your browser. You’ll see a record button.

The button, for now, does nothing. We’ll need to bind a click event that will trigger the recording.

Create a public/assets/js/record.js file with the following content:

//initialize elements we'll use
const recordButton = document.getElementById('recordButton');
const recordButtonImage = recordButton.firstElementChild;

let chunks = []; //will be used later to record audio
let mediaRecorder = null; //will be used later to record audio
let audioBlob = null; //the blob that will hold the recorded audio

We’re initializing the variables we’ll use later. Then create a record function, which will be the listener for the click event on recordButton:

function record() {
  //TODO start recording
}

recordButton.addEventListener('click', record);

We’re also attaching this function as an event listener to the record button.

Media recording

In order to start recording, we’ll need to use the mediaDevices.getUserMedia() method.

This method prompts the user for permission and, only once it’s granted, gives us a stream of their audio and/or video. In other words, getUserMedia lets us access local input devices such as the microphone and camera.

getUserMedia accepts a MediaStreamConstraints object as its parameter. Its two members, audio and video, specify which media types we expect in the stream returned by getUserMedia. Each can be a Boolean or, for finer control, an object of track constraints.

If the value is false, it means we’re not interested in accessing this device or recording this media.

getUserMedia returns a promise. If the user allows the website to record, the promise’s fulfillment handler receives a MediaStream object containing the user’s audio and/or video tracks. If the user denies permission, the promise is rejected instead.
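Here is a minimal sketch of that promise flow. It is not part of the tutorial’s code. The hypothetical requestMicrophone helper asks for audio only, using the standard echoCancellation and noiseSuppression track constraints. Browsers treat these as requests rather than guarantees. The mediaDevices parameter is an assumption that lets the function run outside a browser; on the page you would pass navigator.mediaDevices.

```javascript
// Hypothetical helper: asks for microphone access with audio-only constraints.
// `mediaDevices` is injected (normally `navigator.mediaDevices`).
async function requestMicrophone(mediaDevices) {
  const constraints = {
    // audio can be `true` or an object of track constraints
    audio: { echoCancellation: true, noiseSuppression: true },
    video: false, // we don't want the camera
  };
  try {
    // Resolves with a MediaStream once the user grants permission
    return await mediaDevices.getUserMedia(constraints);
  } catch (err) {
    // NotAllowedError: the user (or browser policy) denied access
    if (err && err.name === 'NotAllowedError') {
      throw new Error('Microphone permission was denied');
    }
    // Anything else (e.g. NotFoundError: no matching input device) is rethrown
    throw err;
  }
}
```

In record.js, you could then call requestMicrophone(navigator.mediaDevices).then((stream) => { ... }) in place of the direct getUserMedia call.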

Media capture and streams

To record the tracks in a MediaStream, we use the MediaRecorder interface. We create a new MediaRecorder object, passing the MediaStream to its constructor. We then control the recording through its methods, such as start() and stop().
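By default, MediaRecorder picks the recording format itself. Its constructor also accepts an optional options object with a mimeType member. The static MediaRecorder.isTypeSupported() method reports whether a given type can be recorded.

The sketch below uses both to choose a format. The pickMimeType name and the candidate list are illustrative assumptions; the tutorial’s own code simply uses the default. As far as we know, major browsers don’t offer MP3 here, so it isn’t in the list.

```javascript
// Hypothetical helper: returns the first MIME type the recorder supports,
// or '' to let the browser choose its default.
// `isTypeSupported` is injected (normally MediaRecorder.isTypeSupported).
function pickMimeType(isTypeSupported, candidates = [
  'audio/webm;codecs=opus',
  'audio/ogg;codecs=opus',
  'audio/mp4',
]) {
  return candidates.find((type) => isTypeSupported(type)) || '';
}

// In the browser (sketch):
// const mimeType = pickMimeType((t) => MediaRecorder.isTypeSupported(t));
// const recorder = new MediaRecorder(stream, mimeType ? { mimeType } : {});
// recorder.start(); // begin recording
// recorder.stop();  // end recording; fires dataavailable, then stop
```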

Inside the record function, add the following:

//check if browser supports getUserMedia
if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
  alert('Your browser does not support recording!');
  return;
}

// browser supports getUserMedia
// change image in button
recordButtonImage.src = `/images/${mediaRecorder && mediaRecorder.state === 'recording' ? 'microphone' : 'stop'}.png`;

if (!mediaRecorder) {
  // start recording
  navigator.mediaDevices.getUserMedia({
    audio: true,
  })
    .then((stream) => {
      mediaRecorder = new MediaRecorder(stream);
      mediaRecorder.start();
      mediaRecorder.ondataavailable = mediaRecorderDataAvailable;
      mediaRecorder.onstop = mediaRecorderStop;
    })
    .catch((err) => {
      alert(`The following error occurred: ${err}`);
      // change image in button
      recordButtonImage.src = '/images/microphone.png';
    });
} else {
  // stop recording
  mediaRecorder.stop();
}

Browser support

We’re first checking whether navigator.mediaDevices and navigator.mediaDevices.getUserMedia are defined, since some browsers, such as Internet Explorer, don’t support them.

Furthermore, getUserMedia is only available in secure contexts. That means a page loaded using HTTPS or file://, or from localhost. If the page isn’t loaded securely, navigator.mediaDevices will be undefined.
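The checks above can be gathered into one hypothetical isRecordingSupported function. This sketch also checks window.isSecureContext, and it checks that the MediaRecorder constructor exists, since the tutorial needs both. Passing the window object in as a parameter is an assumption made so the check can run outside a browser.

```javascript
// Hypothetical feature check for recording support.
// `win` is injected (normally `window`).
function isRecordingSupported(win) {
  const nav = win.navigator;
  return Boolean(
    win.isSecureContext &&                    // true on HTTPS, localhost, etc.
    nav && nav.mediaDevices &&                // undefined in insecure contexts
    typeof nav.mediaDevices.getUserMedia === 'function' &&
    typeof win.MediaRecorder === 'function'   // needed to record the stream
  );
}
```

Inside record, you could then write `if (!isRecordingSupported(window)) { alert('Your browser does not support recording!'); return; }` instead of the original condition.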

Start recording

If the condition is false (that is, both mediaDevices and getUserMedia are supported), we first change the recording button’s image to stop.png. You can download this image from Iconscout or the GitHub repository and place it in public/assets/images.

Then, we’re checking if mediaRecorder — which we defined at the beginning of the file — is or isn’t null.

If it’s null, it means there’s no ongoing recording. So, we get a MediaStream instance to start recording using getUserMedia.

We’re passing it an object with only the key audio and value true, as we’re just recording the audio.

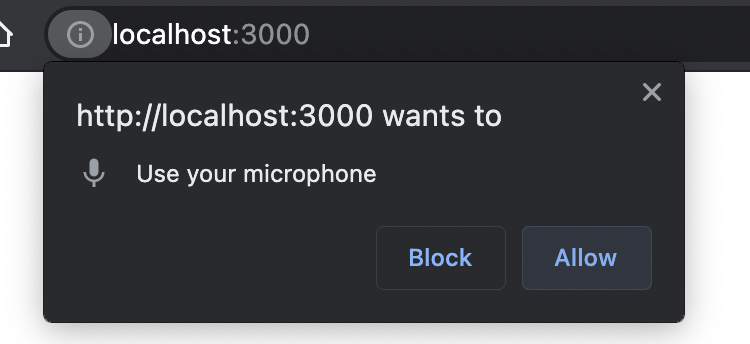

This is where the browser prompts the user to allow the website to access the microphone. If the user allows it, the code inside the fulfillment handler will be executed:

mediaRecorder = new MediaRecorder(stream);
mediaRecorder.start();
mediaRecorder.ondataavailable = mediaRecorderDataAvailable;
mediaRecorder.onstop = mediaRecorderStop;

Here we’re creating a new MediaRecorder, assigning it to mediaRecorder which we defined at the beginning of the file.

We’re passing the constructor the stream received from getUserMedia. Then, we’re starting the recording using mediaRecorder.start().

Finally, we’re binding event handlers (which we’ll create soon) to two events, dataavailable and stop.

We’ve also added a catch handler in case the user doesn’t allow the website to access the microphone, or any other error occurs.

Stop recording

All of this happens when mediaRecorder is null. If it isn’t null, there’s an ongoing recording and the user is ending it. So, we use the mediaRecorder.stop() method to stop the recording:

} else {
  //stop recording
  mediaRecorder.stop();
}

Handle media recording events

Our code so far starts and stops the recording when the user clicks the record button. Next, we’ll add the event handlers for dataavailable and stop.

On data available

The dataavailable event fires when the recording stops. You can also pass the optional timeslice parameter (a number of milliseconds) to mediaRecorder.start(). The event then also fires each time that much media has been recorded, which lets you slice the recording and obtain it in chunks.
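To illustrate the timeslice option, here is a hypothetical recordInSlices helper. Nothing else in the tutorial uses it. It starts a recorder with a timeslice, collects every non-empty chunk, and resolves with the array once the stop event fires. It assumes the caller stops the recorder separately, for example with mediaRecorder.stop() from the button handler.

```javascript
// Hypothetical helper: records with a timeslice and resolves with all chunks.
// `recorder` is a MediaRecorder (or any object with the same handlers/methods).
function recordInSlices(recorder, timeslice) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    // Fires roughly every `timeslice` ms, and once more when recording stops
    recorder.ondataavailable = (e) => {
      if (e.data && e.data.size > 0) chunks.push(e.data); // skip empty slices
    };
    recorder.onstop = () => resolve(chunks);
    recorder.onerror = (e) => reject(e.error || e);
    recorder.start(timeslice); // timeslice is in milliseconds
  });
}
```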

Create the mediaRecorderDataAvailable function to handle the dataavailable event. It simply pushes the Blob from the received BlobEvent’s data property onto the chunks array we defined at the beginning of the file:

function mediaRecorderDataAvailable(e) {
  chunks.push(e.data);
}

The chunks array will hold the Blob pieces of the user’s recording.

On stop

Before we create mediaRecorderStop, which will handle the stop event, let’s first add the HTML container that will hold the recorded audio along with the Save and Discard buttons.

Add the following in public/index.html just before the closing </body> tag:

<div class="recorded-audio-container mt-5 d-none flex-column justify-content-center align-items-center"
  id="recordedAudioContainer">
  <div class="actions mt-3">
    <button class="btn btn-success rounded-pill" id="saveButton">Save</button>
    <button class="btn btn-danger rounded-pill" id="discardButton">Discard</button>
  </div>
</div>

Then, at the beginning of public/assets/js/record.js, add a variable that holds the #recordedAudioContainer element:

const recordedAudioContainer = document.getElementById('recordedAudioContainer');

We can now implement mediaRecorderStop. This function will:

- remove any previously recorded audio element that wasn’t saved
- create a new audio element
- set its src to an object URL for the Blob of the recorded stream
- show the container

function mediaRecorderStop () {
  //check if there are any previous recordings and remove them
  if (recordedAudioContainer.firstElementChild.tagName === 'AUDIO') {
    recordedAudioContainer.firstElementChild.remove();
  }

  //create a new audio element that will hold the recorded audio
  const audioElm = document.createElement('audio');
  audioElm.setAttribute('controls', ''); //add controls

  //create the Blob from the chunks, labelled with the format the recorder actually
  //produced (e.g. audio/webm in Chrome); browsers generally don't record MP3
  audioBlob = new Blob(chunks, { type: mediaRecorder.mimeType });
  const audioURL = window.URL.createObjectURL(audioBlob);
  audioElm.src = audioURL;

  //show audio
  recordedAudioContainer.insertBefore(audioElm, recordedAudioContainer.firstElementChild);
  recordedAudioContainer.classList.add('d-flex');
  recordedAudioContainer.classList.remove('d-none');

  //release the microphone so the browser's recording indicator turns off
  mediaRecorder.stream.getTracks().forEach((track) => track.stop());

  //reset to default
  mediaRecorder = null;
  chunks = [];
}

At the end, we reset mediaRecorder and chunks to their initial values to handle the next recording. With this code, our website can record audio. When the user stops recording, it lets them play the recorded audio.

The last thing we need to do is link to record.js in index.html. Add the script at the end of the body:

<script src="/js/record.js"></script>

Test recording

Let’s see it now. Go to localhost:3000 in your browser and click on the record button. You’ll be asked to allow the website to use the microphone.

Make sure you’re loading the website either on localhost or an HTTPS server even if you’re using a supported browser. MediaDevices and getUserMedia are not available under other conditions.

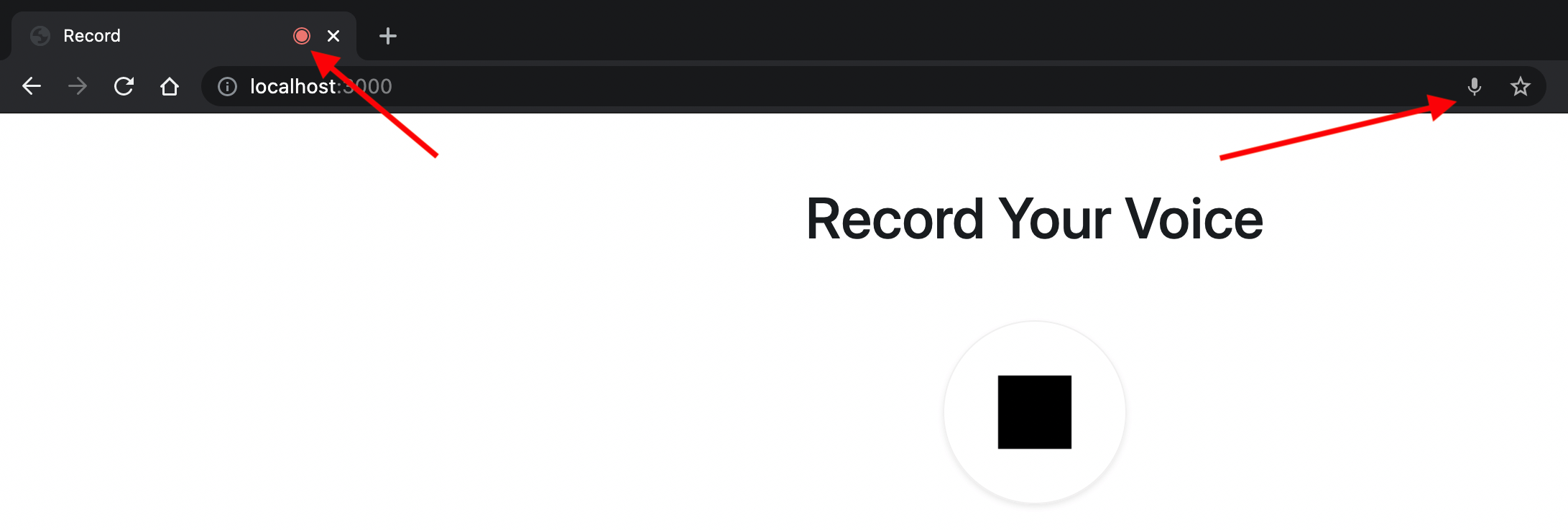

Click on Allow. The microphone image will then change to the stop image. Depending on your browser, you should also see a recording indicator in the address bar or tab, showing that the website is currently accessing the microphone.

Try recording for a few seconds. Then click on the stop button. The image of the button will change back to the microphone image, and the audio player will show up with two buttons — Save and Discard.

Next, we’ll implement the click events of the Save and Discard buttons. The Save button should upload the audio to the server, and the Discard button should remove it.

Discard click event handler

We’ll first implement the event handler for the Discard button. Clicking this button should first show the user a prompt to confirm that they want to discard the recording. Then, if the user confirms, it will remove the audio player and hide the buttons.

Add the variable that will hold the Discard button to the beginning of public/assets/js/record.js:

const discardAudioButton = document.getElementById('discardButton');

Then, add the following to the end of the file:

function discardRecording () {
  //show the user the prompt to confirm they want to discard
  if (confirm('Are you sure you want to discard the recording?')) {
    //discard audio just recorded
    resetRecording();
  }
}

function resetRecording () {
  if (recordedAudioContainer.firstElementChild.tagName === 'AUDIO') {
    //remove the audio
    recordedAudioContainer.firstElementChild.remove();
    //hide recordedAudioContainer
    recordedAudioContainer.classList.add('d-none');
    recordedAudioContainer.classList.remove('d-flex');
  }
  //reset audioBlob for the next recording
  audioBlob = null;
}

//add the event listener to the button
discardAudioButton.addEventListener('click', discardRecording);

You can now try recording something, then clicking the Discard button. The audio player will be removed and the buttons hidden.

Upload to Server

Save click event handler

Now, we’ll implement the click handler for the Save button. This handler will upload the audioBlob to the server using the Fetch API when the user clicks the Save button.

If you’re unfamiliar with the Fetch API, you can learn more in our “Introduction to the Fetch API” tutorial.

Let’s start by creating an uploads directory in the project root:

mkdir uploads

Then, at the beginning of record.js, add a variable that will hold the Save button element:

const saveAudioButton = document.getElementById('saveButton');

Then, at the end, add the following:

function saveRecording () {
  //the form data that will hold the Blob to upload
  const formData = new FormData();
  //add the Blob to formData; the server takes the extension from this file name
  formData.append('audio', audioBlob, 'recording.mp3');

  //send the request to the endpoint
  fetch('/record', {
    method: 'POST',
    body: formData
  })
    .then((response) => response.json())
    .then(() => {
      alert("Your recording is saved");
      //reset for next recording
      resetRecording();
      //TODO fetch recordings
    })
    .catch((err) => {
      console.error(err);
      alert("An error occurred, please try again later");
      //reset for next recording
      resetRecording();
    });
}

//add the event handler to the click event
saveAudioButton.addEventListener('click', saveRecording);

Notice that, once the recording is uploaded, we’re using resetRecording to reset the audio for the next recording. Later, we’ll fetch all the recordings to show them to the user.
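One thing to keep in mind with saveRecording: fetch only rejects on network failures. An HTTP error such as a 500 still reaches the first .then. Depending on the response body, the code then either shows “Your recording is saved” anyway or fails while parsing the JSON.

Below is a minimal async/await sketch that checks response.ok. uploadRecording and its injectable fetchImpl parameter are hypothetical names introduced for illustration.

```javascript
// Hypothetical async/await variant of saveRecording's request.
// `fetchImpl` is injected (defaults to the global fetch).
async function uploadRecording(blob, fetchImpl = fetch) {
  const formData = new FormData();
  // Field name must match upload.single('audio') on the server
  formData.append('audio', blob, 'recording.mp3');
  const response = await fetchImpl('/record', { method: 'POST', body: formData });
  // fetch only rejects on network errors, so check the HTTP status too
  if (!response.ok) {
    throw new Error(`Upload failed with status ${response.status}`);
  }
  return response.json();
}
```

saveRecording could then call uploadRecording(audioBlob).then(...).catch(...), keeping the same alert and reset logic.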

Create API Endpoint

We need to implement the API endpoint now. The endpoint will save the uploaded audio to the uploads directory.

To handle file upload easily in Express, we’ll use the library Multer. Multer provides a middleware to handle the file upload.

Run the following to install it:

npm i multer

Then, in index.js, add the following to the beginning of the file:

const fs = require('fs');
const multer = require('multer');

const storage = multer.diskStorage({
  destination(req, file, cb) {
    cb(null, 'uploads/');
  },
  filename(req, file, cb) {
    const fileNameArr = file.originalname.split('.');
    cb(null, `${Date.now()}.${fileNameArr[fileNameArr.length - 1]}`);
  },
});

const upload = multer({ storage });

We declared storage using multer.diskStorage. We’re configuring it to store files in the uploads directory and to name each file after the current timestamp, followed by the original file’s extension.

Then, we declared upload, which will be the middleware that will upload files.
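The filename callback extracts the extension with split('.'). When there is no dot, this returns the whole name, so a file named noext would be saved as something like 1700000000000.noext.

A possible alternative, sketched below as a hypothetical makeFileName helper, uses Node’s path.extname. That function returns the extension including the dot, or an empty string if there is none.

```javascript
const path = require('path');

// Hypothetical rewrite of the filename logic: timestamp + original extension.
// path.extname returns '' when there is no extension, unlike split('.').
function makeFileName(originalname, now = Date.now()) {
  const ext = path.extname(originalname); // e.g. '.mp3' (includes the dot)
  return `${now}${ext}`;
}

// Inside multer.diskStorage({ ... }):
// filename(req, file, cb) { cb(null, makeFileName(file.originalname)); }
```

Two uploads within the same millisecond would still get the same name; appending a random suffix is one way to avoid that.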

Next, we want to make files inside the uploads directory publicly accessible. So, add the following before app.listen:

app.use(express.static('uploads'));

Finally, we’ll create the upload endpoint. This endpoint will just use the upload middleware to upload the audio and return a JSON response:

app.post('/record', upload.single('audio'), (req, res) => res.json({ success: true }));

The upload middleware will handle the file upload. We just need to pass the field name of the file we’re uploading to upload.single.

Please note that, normally, you need to perform validation on files and make sure that the correct, expected file types are being uploaded. For simplicity’s sake, we’re omitting that in this tutorial.
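As a starting point for that validation, Multer accepts a fileFilter function and a limits object in its options. The sketch below is a hypothetical audioFileFilter that accepts only files whose reported MIME type starts with audio/.

The MIME type is supplied by the client, so this is a convenience check rather than real content validation. The 10 MB limit in the usage comment is an arbitrary example.

```javascript
// Hypothetical multer fileFilter: accept only files the client labelled as audio.
// The mimetype comes from the client, so it is not a security guarantee.
function audioFileFilter(req, file, cb) {
  if (typeof file.mimetype === 'string' && file.mimetype.startsWith('audio/')) {
    cb(null, true); // accept the file
  } else {
    cb(null, false); // skip it; req.file will be undefined in the route
  }
}

// Usage (sketch):
// const upload = multer({
//   storage,
//   fileFilter: audioFileFilter,
//   limits: { fileSize: 10 * 1024 * 1024 }, // 10 MB, an arbitrary example limit
// });
```

When the filter rejects a file, req.file is undefined in the /record handler. The handler could check for that and respond with an error status instead of { success: true }.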

Test upload

Let’s test it out. Go to localhost:3000 in your browser again, record something, and click the Save button.

The request will be sent to the endpoint, the file will be uploaded, and an alert will be shown to the user to inform them the recording is saved.

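You can confirm that the audio was actually uploaded by checking the uploads directory at the root of your project. You should find a file with the .mp3 extension there.

Keep in mind that MediaRecorder doesn’t actually encode MP3. The extension comes from the recording.mp3 file name set on the client, while the contents are in whatever format the browser recorded (for example, WebM or Ogg).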

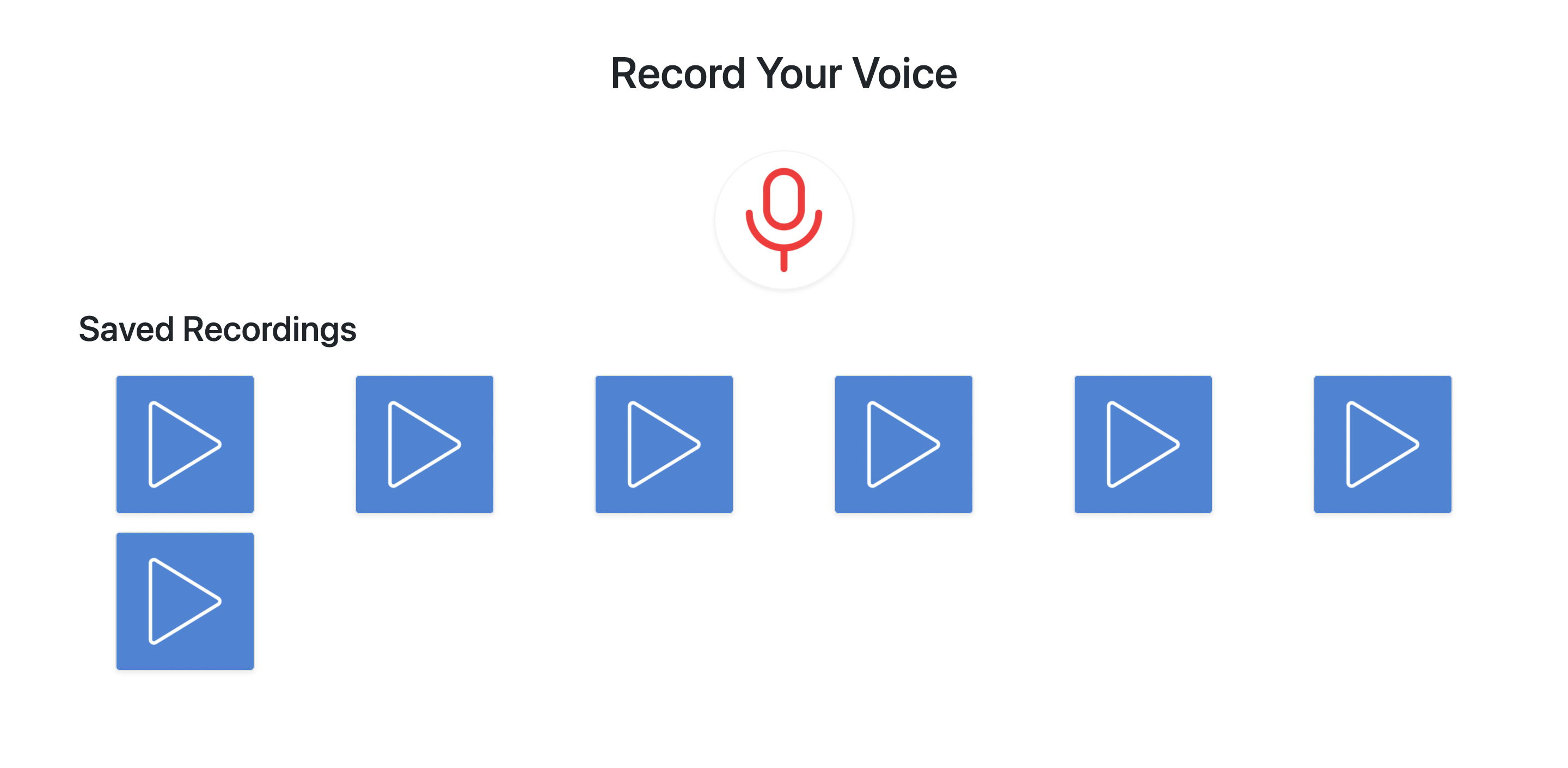

Show Recordings

Create an API endpoint

The last thing we’ll do is show all recordings to the user so they can play them.

First, we’ll create the endpoint that will be used to get all the files. Add the following before app.listen in index.js:

app.get('/recordings', (req, res) => {
  let files = fs.readdirSync(path.join(__dirname, 'uploads'));
  files = files.filter((file) => {
    // check that the files are audio files
    const fileNameArr = file.split('.');
    return fileNameArr[fileNameArr.length - 1] === 'mp3';
  }).map((file) => `/${file}`);
  return res.json({ success: true, files });
});

We’re reading the files inside the uploads directory, keeping only the mp3 files, and prefixing each file name with a /. Finally, we return a JSON object with the files.
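fs.readdirSync blocks Node’s event loop while it reads the directory. That’s fine for a tutorial, but an asynchronous read avoids holding up other requests.

Below is a non-blocking sketch using fs/promises. listRecordings is a hypothetical helper. It also skips subdirectories and compares extensions case-insensitively. The route in the comment is one possible way to use it.

```javascript
const fs = require('fs/promises');
const path = require('path');

// Hypothetical non-blocking variant of the /recordings logic.
// Returns URL paths (e.g. '/1700000000000.mp3') for regular files whose
// lowercased extension is in allowedExts (entries must be lowercase, with the dot).
async function listRecordings(dir, allowedExts = ['.mp3']) {
  const entries = await fs.readdir(dir, { withFileTypes: true });
  return entries
    .filter((entry) => entry.isFile())
    .map((entry) => entry.name)
    .filter((name) => allowedExts.includes(path.extname(name).toLowerCase()))
    .map((name) => `/${name}`);
}

// Route usage (sketch):
// app.get('/recordings', async (req, res) => {
//   try {
//     const files = await listRecordings(path.join(__dirname, 'uploads'));
//     res.json({ success: true, files });
//   } catch (err) {
//     res.status(500).json({ success: false });
//   }
// });
```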

Add a recordings container element

Next, we’ll add an HTML element that will be the container of the recordings we’ll show. Add the following at the end of the body before the record.js script:

<h2 class="mt-3">Saved Recordings</h2>
<div class="recordings row" id="recordings">
</div>

Fetch files from the API

Also, add a variable at the beginning of record.js that will hold the #recordings element:

const recordingsContainer = document.getElementById('recordings');

Then, we’ll add a fetchRecordings function that calls the endpoint we created earlier. It then uses a createRecordingElement function to render the audio players.

We’ll also add a playRecording function as the listener for the click event on the button that plays the audio.

Add the following at the end of record.js:

function fetchRecordings () {
  fetch('/recordings')
    .then((response) => response.json())
    .then((response) => {
      if (response.success && response.files) {
        //remove all previous recordings shown
        recordingsContainer.innerHTML = '';
        response.files.forEach((file) => {
          //create the recording element
          const recordingElement = createRecordingElement(file);
          //add it to the recordings container
          recordingsContainer.appendChild(recordingElement);
        });
      }
    })
    .catch((err) => console.error(err));
}

//create the recording element
function createRecordingElement (file) {
  //container element
  const recordingElement = document.createElement('div');
  recordingElement.classList.add('col-lg-2', 'col', 'recording', 'mt-3');

  //audio element
  const audio = document.createElement('audio');
  audio.src = file;
  audio.onended = (e) => {
    //when the audio ends, change the image inside the button to play again
    e.target.nextElementSibling.firstElementChild.src = '/images/play.png';
  };
  recordingElement.appendChild(audio);

  //button element
  const playButton = document.createElement('button');
  playButton.classList.add('play-button', 'btn', 'border', 'shadow-sm', 'text-center', 'd-block', 'mx-auto');

  //image element inside button
  const playImage = document.createElement('img');
  playImage.src = '/images/play.png';
  playImage.classList.add('img-fluid');
  playButton.appendChild(playImage);

  //add event listener to the button to play the recording
  playButton.addEventListener('click', playRecording);
  recordingElement.appendChild(playButton);

  //return the container element
  return recordingElement;
}

function playRecording (e) {
  let button = e.target;
  if (button.tagName === 'IMG') {
    //get parent button
    button = button.parentElement;
  }

  //get audio sibling
  const audio = button.previousElementSibling;
  if (audio && audio.tagName === 'AUDIO') {
    if (audio.paused) {
      //if audio is paused, play it
      audio.play();
      //change the image inside the button to pause
      button.firstElementChild.src = '/images/pause.png';
    } else {
      //if audio is playing, pause it
      audio.pause();
      //change the image inside the button to play
      button.firstElementChild.src = '/images/play.png';
    }
  }
}

Notice that, inside the playRecording function, we’re checking whether the audio is playing using audio.paused, which is true when the audio isn’t currently playing.
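One detail worth knowing: audio.play() returns a promise, which rejects if playback can’t start (for example, when the browser blocks it). The hypothetical togglePlayback sketch below awaits that promise. It only switches the button to the pause icon once playback has actually begun. The image parameter stands for the img inside the play button.

```javascript
// Hypothetical async variant of the toggle in playRecording.
// audio.play() returns a promise that rejects if playback can't start.
async function togglePlayback(audio, image) {
  if (audio.paused) {
    try {
      await audio.play();
      image.src = '/images/pause.png'; // switch icon only once playback started
    } catch (err) {
      console.error('Playback failed:', err); // keep the play icon
    }
  } else {
    audio.pause();
    image.src = '/images/play.png';
  }
}
```

In playRecording, the inner if/else block could then be replaced with togglePlayback(audio, button.firstElementChild).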

We’re also using play and pause icons that will show inside each recording. You can get these icons from Iconscout or the GitHub repository.

We’ll use fetchRecordings when the page loads and when a new recording has been uploaded.

So, call fetchRecordings() at the end of record.js so the recordings load with the page. Also call it inside the fulfillment handler in saveRecording, in place of the TODO comment:

.then(() => {
  alert("Your recording is saved");
  //reset for next recording
  resetRecording();
  //fetch recordings
  fetchRecordings();
})

Adding styles

The last thing we need to do is add some styling to the elements we are creating. Add the following to public/assets/css/index.css:

.play-button:hover {
  box-shadow: 0 .5rem 1rem rgba(0,0,0,.15)!important;
}

.play-button {
  height: 8em;
  width: 8em;
  background-color: #5084d2;
}

Test everything

It’s all ready now. Open the website on localhost:3000 in your browser, and if you uploaded any recordings before, you’ll see them now. You can also try uploading new ones and see the list get updated.

The user can now record their voice and then save or discard the recording. The user can also view all uploaded recordings and play them.

Conclusion

The MediaStream API allows us to add media features for the user, such as recording audio. It also allows recording videos, taking screenshots, and more. With the information in this tutorial, along with useful tutorials from MDN and SitePoint, you’ll be able to add a whole range of other media features to your website as well.

FAQs about the MediaStream API

What is the MediaStream API?

The MediaStream API is a web platform API that provides a way to capture, manipulate, and stream audio and video in web applications.

How does the MediaStream API work?

The API allows developers to access multimedia streams from various sources, such as the user’s microphone or camera, and manipulate these streams through JavaScript.

Does the MediaStream API support both audio and video?

Yes, the MediaStream API supports both audio and video streams. Developers can access and manipulate these streams separately or together.

How can I access the user’s camera with the MediaStream API?

Developers can call getUserMedia with the constraint { video: true } to prompt the user for permission to access their camera. This method returns a promise that resolves to a MediaStream object containing the video stream.

Can the MediaStream API be used for screen sharing?

Yes. Calling navigator.mediaDevices.getDisplayMedia() captures the user’s screen as a media stream. Keep in mind that some browsers may require additional permissions for screen sharing.

I am a full-stack developer passionate about learning something new every day, then sharing my knowledge with the community.